Every SQL Server database is mapped over a set of operating-system files. These files store data and log information. Individual files are used only by one database, and data and log information are never mixed in the same file. While data is stored in an MDF file, all transactions and the SQL Server database modifications made by each transaction are stored in an LDF file – a transaction log file which is an essential component of the database. Conceptually, the log file is a string of log records. Physically, the log records are stored in one or the set of physical LDF files that implement the transaction log

The primary purpose of an LDF file is to provide the ACID concept – Atomicity, Consistency, Isolation, and Durability

- atomicity: if one part of the transaction fails, the entire transaction fails, and the database state is left unchanged

- consistency: any transaction brings the database from one valid state to another

- isolation: the execution of concurrent transactions brings the database to a state as if the transactions were executed serially, one by one

- durability: once committed, the transaction remain so, even in the case of errors, power loss, or crashes

An LDF file stores enough information to replay or undo a change, or recover the database to a specific point in time. Therefore, due to various auditing or recovery requirements, there is often a need to open the LDF file and view its contents. But viewing LDF file content is not an easy task

There are several SQL Server functions and commands (e.g. fn_dblog, fn_dump_dblog, and DBCC PAGE) that potentially provide a way to view LDF file content. However, significant knowledge of T-SQL is required to use them, some are undocumented, and the results they provide are difficult to be converted to a human-readable format. Following are the examples of viewing LDF file content using SQL Server functions and commands:

-

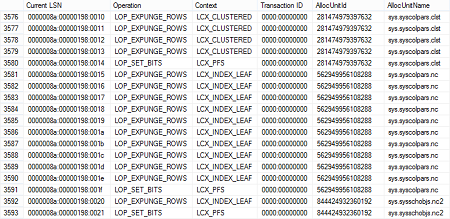

Here is an example using fn_dblog to read an online transaction log, with a result of 129 columns (only 7 shown here)

-

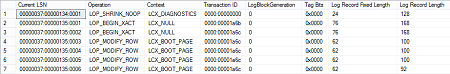

The fn_dump_dblog function is used to read transaction log native or natively compressed backups. The result is similar:

Unfortunately, no official documentation is available for fn_dblog and fn_dump_dblog functions. To translate the columns, you need to be familiar with the internal structure and data format, flags and their total number in a row data

-

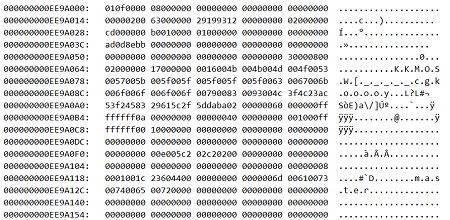

DBCC PAGE is used to read the content of database online files – MDF and LDF. The result is a hexadecimal output, which unless you have a hex editor, will be difficult to interpret

Using ApexSQL Log as a LDF file reader

ApexSQL Log is a SQL Server transaction log reader which reads online transaction logs, detached transaction logs and transaction log backups – both native and natively compressed. As an LDF viewer, it’s focused on operations (both DML and DDL, 45 in total), and what was changed by execution of these operations

Besides showing the logical content of an LDF file, ApexSQL Log also provides some additional features like creating Undo/Redo scripts, a row history for DML operations, and more

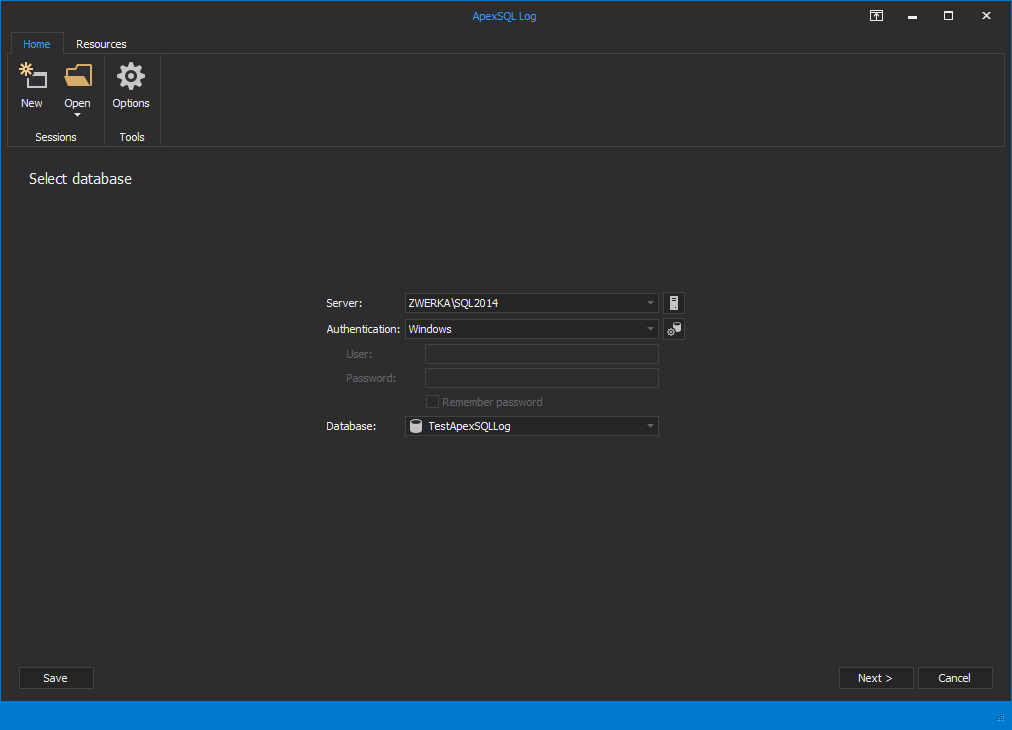

To open and view the LDF file using ApexSQL Log:

-

Connect to the database that the LDF file belongs to

-

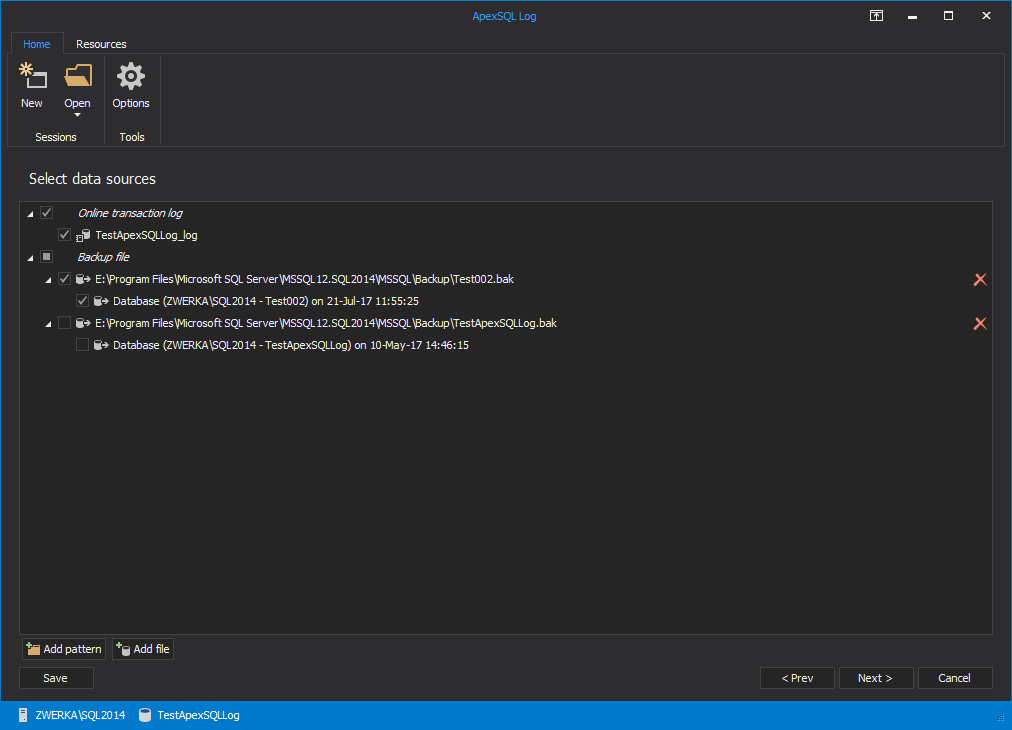

The next step is to add any LDF file backups and/or detached LDF files containing the information you need to view. Make sure they form a full log chain. A log chain is a continuous sequence of transaction log backups. It starts with a full database backup followed by all subsequent log backups up through the auditing point. If it becomes broken, only the transactions in the logs up to the last backup before the missing one can be shown with full information (e.g. a schema and object name, or a row history)

Unlike INSERT and DELETE operations, which are fully logged in the LDF files, UPDATE operations are logged minimally – only the changes that are made are logged, but the old and new values are not. When logging UPDATE operations, SQL Server doesn’t log complete before and after row states but only the incremental change that occurred to the row. For example, if a word “log” was updated to word “blog” SQL Server will, in general case, only log an addition of letter “b” at index 0. This is enough for its purpose of ensuring ACID but not enough to easily show before and after states of the row. So, in order to understand what changed really occurred, ApexSQL Log has to reconstruct the context in which the change occurred from the rest of transaction log and/or backup and online database data

To achieve this it deploys various, mutually complementary row state reconstruction techniques, one of which is reconstruction using the full log chain. Specifying the full log chain, together with the most recent full and differential backups, allows ApexSQL Log to reconstruct the UPDATE context starting from the updated row’s state at the time of the last backup and reconstructing all the operations that affected since then. In our example, ApexSQL Log would find the row’s state at the time of the last backup, see that the value of the field was “log” and from there conclude that the logged change of addition of “b” at index 0 has transformed the field value to “blog”

Moreover, a log chain enables reading the transactions quicker using just several transaction log backup files instead of a single online transaction log which can be multiple times bigger than all transaction log backups used. Additionally, a detached LDF file can be used as a reading source too – ApexSQL Log will analyze all provided sources in order to provide accurate information about transactions saved in LDF files

To do that use the Add file button at Select data sources step

-

Using the Database backups tab provide the full database backup which will be used as the starting point from which the full chain of transactions starts

-

Next, opt for the Open results in grid option – this will load auditing output into comprehensive grid suitable for further investigations.

-

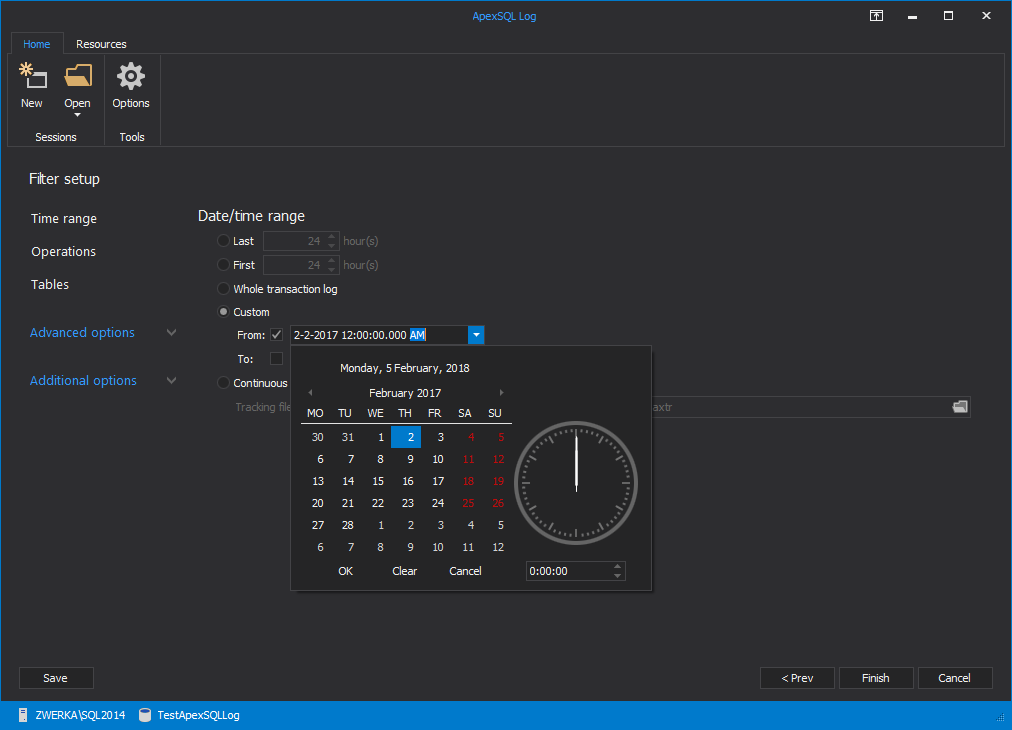

Using the Time range section in Filter setup options step, specify the time frame when the operations you are interested in were executed. This will speed up the process by narrowing down to the required operations

- When all this is set, use the Finish button to start the process of reading LDF files

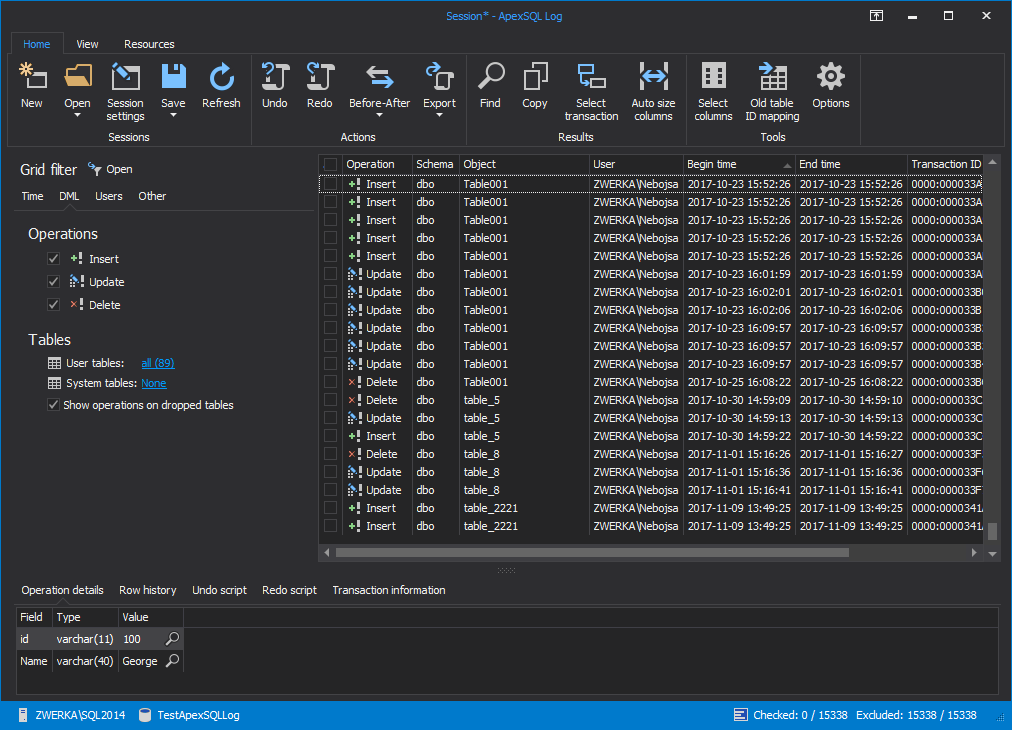

All transactions, according to the provided sources and set filters, will be shown in the ApexSQL Log’s main grid when the process finishes

Conclusion about the LDF file reader

As described, there are different ways to open an LDF file, and most of them do just that – opens it. It’s tricky to get any human readable information and make a use of it though

On the other hand, besides simply opening an LDF file, ApexSQL Log adds value to the process. You will be able to read transaction logs to see the operation type, the schema and object name of the object affected, the time when the operation was executed, the name of the user who executed the operation, and more

The main advantages of processing an LDF file with ApexSQL Log vs. just opening it are that it can:

- Show human readable information by linking and interpreting data (convert hex and binary values) from 129 native columns

- Chain different LDF files together into one

- Combine online, and backed up files (both database and LDF files), in order to provide more details on each transaction

- Fully reconstruct UPDATE operations, including the old (before) and the new (after) field value

- Show a complete row changes history for DML operations including the login of a user who performed each operation, and the time of transaction execution

- Pre-filter the information that will be read in order to save resources and speed up the process

- Mapping of old (dropped) table IDs to allow recovering data from the tables that no longer exist

- Process and display an LDF file content without requiring T-SQL scripting knowledge

Beyond reading, ApexSQL Log also provides:

- Grid sorting, grouping, filtering, and advanced searching enables you to manipulate the data in a myriad of ways

- In addition to rendering in a grid – ApexSQL Log can convert data to CSV, HTML, XML, or SQL and help you manage the export, and save the grid content for later analysis

- Creation of SQL scripts to rollback or replay SQL Server transactions – this can be useful for a database recovery scenario when a missing data needs to be restored, or a data/schema change needs to be rolled back, without doing a full database restore

- Official documentation for all features

- Technical support, and more

September 18, 2013