Introduction

In a scenario where SQL audit data requires special processing, reviews, and analysis, it can be accomplished by designing an in-house custom application to read the data and provide desired output and visualization. Since the ApexSQL Audit central repository database uses a proprietary design with encryption applied, a custom database will have to be used to provide data to the said in-house application.

ApexSQL Audit API can be used to retrieve the SQL audit data in raw form (formatted in most capable JSON format) and populate a custom database with it. The final problem that remains is how to ensure that the custom database always has the latest data imported. This is best resolved with an automated data retrieval method which can be scheduled with the desired frequency, to retrieve data from the repository and import it into a custom database.

Such a solution can be easily designed with a Windows PowerShell script and this article will show an example of how should such a script work.

Custom database

The design of the 3rd party database, used for the custom application, depends on the requirements of that application. However, the basic example of the table that can be used to store the data extracted with the PowerShell API call would look like this:

CREATE TABLE [dbo].[report] ( [ID] [int] IDENTITY(1, 1) NOT NULL ,[Access list] [varchar](max) NULL ,[Application] [varchar](40) NULL ,[Client host] [varchar](20) NULL ,[Database] [varchar](20) NULL ,[Date] [datetime] NULL ,[Event type] [varchar](20) NULL ,[Internal event type] [varchar](20) NULL ,[Login] [varchar](20) NULL ,[Object] [varchar](20) NULL ,[Operation] [varchar](20) NULL ,[Schema] [varchar](20) NULL ,[Server] [varchar](20) NULL ,[State] [varchar](10) NULL ,[Text data] [varchar](max) NULL ,CONSTRAINT [PK__ID__A2CF65630E6B9C22] PRIMARY KEY CLUSTERED ([ID] ASC) )

The table design is proposed based on all details that are available for each event within the SQL auditing data. When the table is ready, it is time to utilize the PowerShell and populate it.

Automation script

The PowerShell script that can be used to populate a SQL table can be designed differently, based on requirements. The basic principle that will be respected with the example in this article consists of three phases:

- Read out the SQL audit data using the API call

- Create the SQL query to insert the data

- Run the query against the target database

For the part where a query is run, we will create a PowerShell function that will use the target SQL Server name, target database, SQL credentials, and the PowerShell object that contains SQL commands as parameters. This means that with this function, we can just change target parameters and run the query against a different target if necessary.

The function should look like this:

Function Add-APIData ($server, $database, $query, $SQLCredentials) { $SQLConnection = New-Object System.Data.SqlClient.SqlConnection $SQLConnection.Credential = $SQLCredentials $SQLConnection.ConnectionString = "SERVER=$server;DATABASE=$database;Integrated Security=true" $SQLCommand = New-Object System.Data.SqlClient.SqlCommand $SQLCommand.Connection = $SQLConnection $SQLCommand.CommandText = $text $SQLCommand.CommandTimeout = 0 $SQLConnection.Open() $SQLCommand.ExecuteNonQuery() $SQLConnection.Close() $SQLCommand.Dispose() $SQLConnection.Dispose() }

This function will establish a connection to the target SQL Server and the custom database, using given credentials and execute the query constructed with the main part of the automation script. Now, the credentials part is usually resolved by adding this command line in the script body:

$SQLCredentials = Get-Credentials

But this will prompt for credentials entry every time the script is executed, which defies the purpose of automation. Instead of that, a technique described in the PowerShell encrypt password techniques for SQL Server article can be used to save credentials in encrypted form and use them with every execution.

With the function defined it is time to move to the main part of the script. The first thing to do is to define SQL Server name and database name for the target, i.e. the custom database:

$SQLServer ='Server Name' $DatabaseName ='Database Name'

Next, we can proceed with pulling the data from the SQL audit data repository. These commands will run this job:

$WebCall = New-Object System.Net.WebClient $WebCall.Credentials = Get-Credential $result = $WebCall.DownloadString("https://Q4V4Y6Y2:49152/report/data?name=Q4V4Y6Y2%5cMilan%2fMonthly+report&last=31:0:00:00&page=1&eventsPerPage=100000")

The „DownloadString“ argument in the last command is the URL needed to run the API call and retrieve the SQL audit data. This string is generated from the ApexSQL Audit application and instructions on how to do that are available in the article: Using API to generate SQL auditing reports

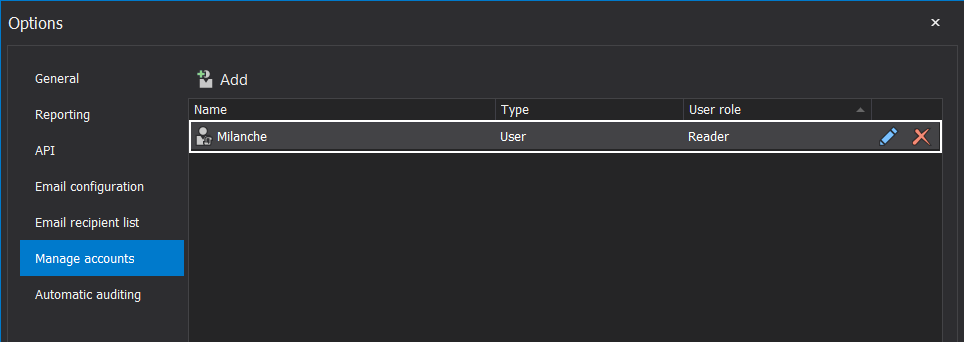

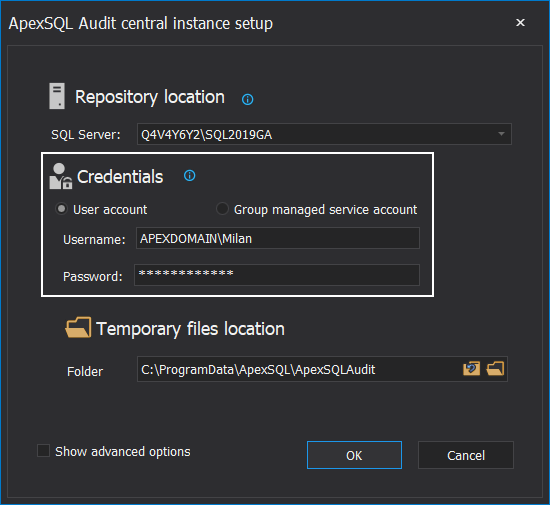

We can see that the command $WebCall.Credentials = Get-Credential Credential is used, and the command will prompt for the ApexSQL Audit access credentials. Those credentials are referring to the accounts dedicated to the ApexSQL Audit access:

Note that it is implied that the account used for the ApexSQL Audit administration is also a valid access account for the API calls:

Again, prompting for credentials disables the automation purpose, so instead of using this command, repeat the same procedure to save credentials as for the SQL credentials.

If the SQL audit reporting API call was run, it is time to create the SQL script that will populate the target table with the data. The following commands are required for the SQL script creation:

$result = ConvertFrom-Json $result $SQLScript = @() foreach ($r in $result.Events) { if ($r.'Text data'.Contains('''')) { $r.'Text data'=$r.'Text data'.Replace('''','"') } $SQLScript += "INSERT INTO dbo.report VALUES ('" + $r.'Access list' + "','" + $r.Application + "','" + $r.'Client host' + "','" + $r.Database + "','" + $r.Date + "','" + $r.'Event type' + "','" + $r.'Internal event type' + "','" + $r.Login + "','" + $r.Object + "','" + $r.Operation + "','" + $r.Schema + "','" + $r.Server + "','" + $r.State + "','" + $r.'Text data' + "')" + $nl }

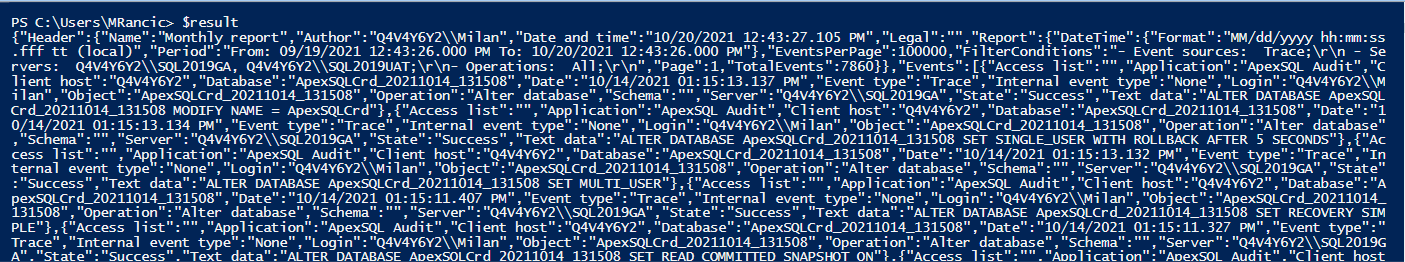

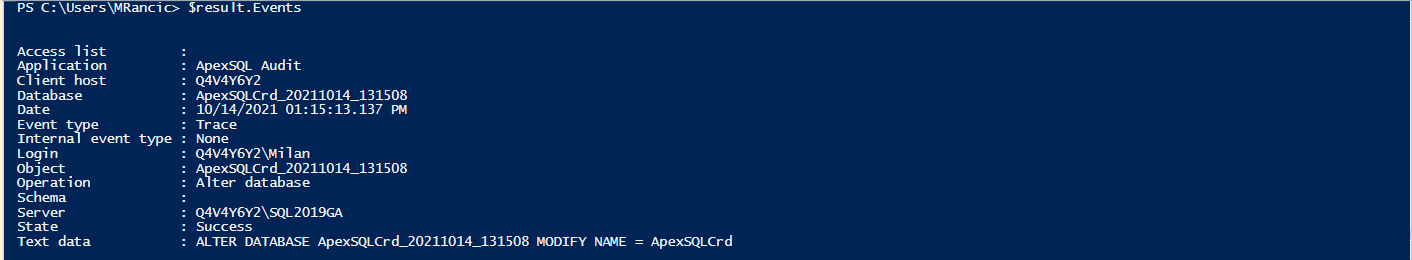

Since the data is streamlined in JSON format, the command $result = ConvertFrom-Json $result will convert the JSON records into a PowerShell object array. In other words, it will convert data output from this form:

To this form:

And now we can convert those records into standard SQL INSERT values which this part of the commands listed above will do:

$SQLScript += "INSERT INTO dbo.report VALUES ('" + $r.'Access list' + "','" + $r.Application + "','" + $r.'Client host' + "','" + $r.Database + "','" + $r.Date + "','" + $r.'Event type' + "','" + $r.'Internal event type' + "','" + $r.Login + "','" + $r.Object + "','" + $r.Operation + "','" + $r.Schema + "','" + $r.Server + "','" + $r.State + "','" + $r.'Text data' + "')" + $nl

We can see that the list of values corresponds to the columns in the table example we used at the beginning, so if a different set of columns is used for the table, the matching set of values should be used.

One problem that might occur when using the query text data as the INSERT value is that the text can contain the apostrophe character which will not work with the INSERT command. This is where we used the following clause:

if ($r.'Text data'.Contains('''')) { $r.'Text data'=$r.'Text data'.Replace('''','"') }

The clause will examine query text data strings and will replace apostrophe characters with double quotes which is fine for the INSERT command. This clause can be used for similar transformations.

The final command will run the created function, establish the SQL Server and database connection, and run the INSERT script:

Add-APIData -server $SQLServer -database $DatabaseName -text $SQLScript -SQLCredentials $SQLCredentials

We can see that, for the arguments in this function, the values that we stored in the script body were used.

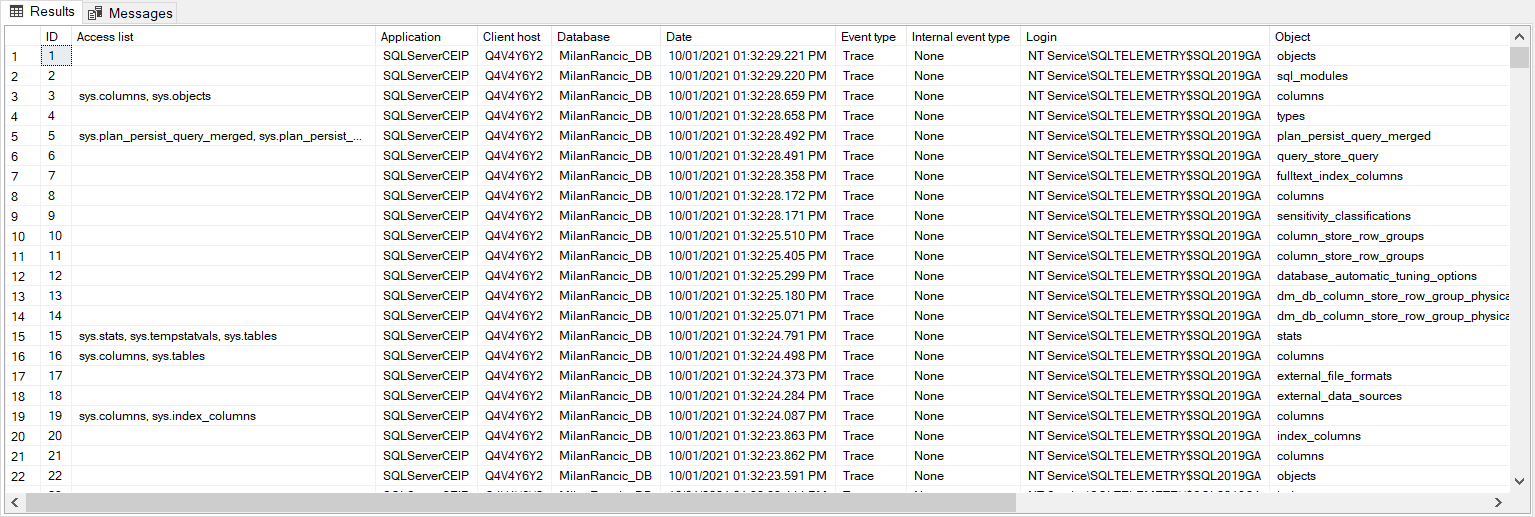

If the script was run manually we can open SSMS and with the query SELECT * FROM [dbo].[report] we can verify that the table has been populated:

The entire PowerShell script would look like this:

-

The save password part (which should be run only once in a separate script):

#Type the SQL account password to save it for automation Read-Host -AsSecureString |ConvertFrom-SecureString |Out-File C:\Credentials\SQLPassword.txt #Type the ApexSQL Audit account password to save it for automation Read-Host -AsSecureString |ConvertFrom-SecureString |Out-File C:\Credentials\ApexSQLAuditPassword.txt

-

The automation script:

#Define the function that establishes the SQL connection and runs the script Function Add-APIData ($server, $database, $query, $SQLCredentials) { $SQLConnection = New-Object System.Data.SqlClient.SqlConnection $SQLConnection.Credential = $SQLCredentials $SQLConnection.ConnectionString = "SERVER=$server;DATABASE=$database;Integrated Security=true" $SQLCommand = New-Object System.Data.SqlClient.SqlCommand $SQLCommand.Connection = $SQLConnection $SQLCommand.CommandText = $text $SQLCommand.CommandTimeout = 0 $SQLConnection.Open() $SQLCommand.ExecuteNonQuery() $SQLConnection.Close() $SQLCommand.Dispose() $SQLConnection.Dispose() } #Set accounts for script automated runs $SQLAccount = 'Account name' $ApexSQLAccount = 'Account name' $EncryptedSQLPass = Get-Content -Path "C:\Credentials\SQLPassword.txt" $EncryptedApexSQLCPass = Get-Content -Path "C:\Credentials\ApexSQLAuditPassword.txt" $SQLCredentials New-Object System.Management.Automation.PSCredential -ArgumentList $SQLAccount, (ConvertTo-SecureString $EncryptedSQLPass) #SEt the target where the SQL script will be run $SQLServer = 'SQL Server name' $DatabaseName = 'Database name' #Run the Web API call $WebCall = New-Object System.Net.WebClient $WebCall.Credentials = New-Object System.Management.Automation.PSCredential -ArgumentList $ApexSQLAccount, (ConvertTo-SecureString $EncryptedApexSQLPass) $result = $WebCall.DownloadString("https://Q4V4Y6Y2:49152/report/data?name=Q4V4Y6Y2%5cMilan%2fMonthly+report&last=31:0:00:00&page=1&eventsPerPage=100000") #Convert the JSON data to PowerShell obect array $result = ConvertFrom-Json $result #Populate the required script with the INSERT values and convert values where necessary $SQLscript = @() foreach ($r in $result.Events) { if ($r.'Text data'.Contains('''')) { $r.'Text data'=$r.'Text data'.Replace('''','"') } $SQscript += "INSERT INTO dbo.report VALUES ('" + $r.'Access list' + "','" + $r.Application + "','" + $r.'Client host' + "','" + $r.Database + "','" + $r.Date + "','" + $r.'Event type' + "','" + $r.'Internal event type' + "','" + $r.Login + "','" + $r.Object + "','" + $r.Operation + "','" + $r.Schema + "','" + $r.Server + "','" + $r.State + "','" + $r.'Text data' + "')" + $nl } #run the function Add-APIData -server $SQLServer -database $DatabaseName -text $SQLScript -SQLCredentials $SQLCredentials

Scheduling

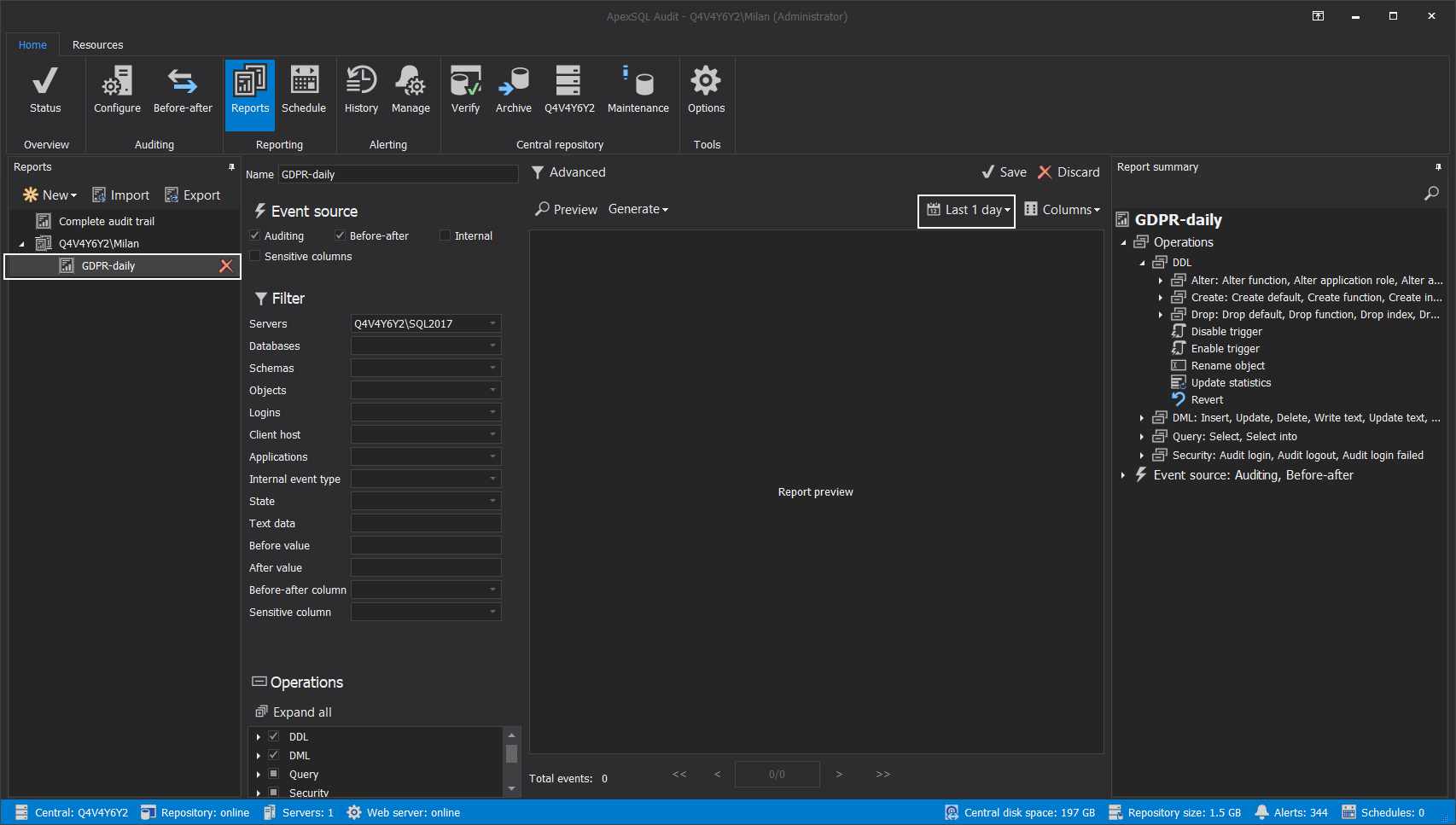

The PowerShell script should be saved as a standard .ps1 file and any scheduler can be used to run it at the desired frequency. Native methods include the Windows Task Scheduler and SQL Agent jobs. The frequency should be set according to the individual needs, i.e. to keep the data set as fresh as needed. Note that the SQL audit report definition created with the ApexsSQL Audit, that the API call will be referring to, will have to be configured with the time range that matches that exact frequency to avoid duplicate records in the custom database. For example, if we need daily retrieval frequency, the report definition should be configured to take the last 1 day of records:

Conclusion

There are already available popular tools for data analysis, like Excel or Power BI that can be used with the ApexSQL Audit API, but if a custom solution is used, including a custom database to work with this solution, the best way to keep the analysis accurate is to automate the SQL audit data retrieval.

December 15, 2021