Depending on your particular environment, database reporting can have a heavy impact on the database performance, can execute queries which run for dozens of minutes or both. This is usually the case with reports which require complex queries having multiple calls to SQL Server’s aggregate functions to be executed against very large data sets. The effect on the database performance is particularly severe in scenarios where the production database stores data which is changed often – as data modification operations require exclusive locks, in order to preserve the integrity of the database, SQL Server will go ahead with the data modification operation until the query initiated by the report is still running. This increases the chances of a deadlock occurring; especially in cases where another set of data modification instructions, dependent on the ones which are waiting for the reporting to finish has already been applied. Therefore, reporting can cause performance degradation in a production environment. So, how can reporting be optimized to prevent such a heavy load on production databases?

There are several approaches which can help to handle heavy-duty reporting. All of them require different levels of proficiency with SQL Server and some of these are quite expensive. One of the most popular approaches is utilizing an OLAP database for reporting purposes

The databases most businesses use to store their business data are called Online Transaction Processing (OLTP) databases as their primary function is to perform transactions over the stored data (i.e. insert, update or delete it). On the other hand Online Analytical Processing (OLAP) databases differ from the OLTP ones in their structure which is primarily based on data redundancy and the fact that the data in an OLAP database never changes. The primary purpose of OLAP databases is to make the gathering and analysis of data much simpler, quicker and easier. As OLAP and OLTP databases are isolated by default, this will negate the direct performance impact of heavy reporting on the production, OLTP databases as they won’t be queried at all

This approach however, does come with certain caveats. OLAP databases require additional administration and planning; but first and foremost requires trained staff in an additional set of technologies and concepts such as multidimensional data or MDX queries as gathering data from OLAP databases is quite different than doing so form a traditional OLTP database and is in most cases, outside the scope of the core DBA and database developer responsibilities

An additional consideration from the performance standpoint is the model under which the data from the OLTP database will be moved to the OLAP one. Namely, in Relational OLAP (ROLAP) no data is stored directly in the multidimensional database; rather, it’s loaded from the relational database as needed – although this doesn’t affect the OLTP database’s performance as much as a straight up report would it still impacts it. On the other hand, in the Multidimensional OLAP (MOLAP) model, the data is loaded into the multidimensional database and cached there; future queries are run against the cached data. Although this is a faster approach, its price lies in the additional disk space required for the cached data. Regardless of the chosen model, in boils down to this: using an OLAP database always costs additional disc space as most of the data in it will be redundant to the data in the live, production database

A simpler and very popular solution to the performance problems caused by heavy reporting is running the reports against a restored full database backup of the production database. This will take the load off the production database, but will result in creating new MDF and LDF files which will be the same size as the production ones. Therefore, depending on the size and the contents of the production database, the process of setting up reporting this way might take a huge chunk of disk space and be pretty slow since the database backup needs to be fully restored before reports can be ran. This is where ApexSQL Restore can help

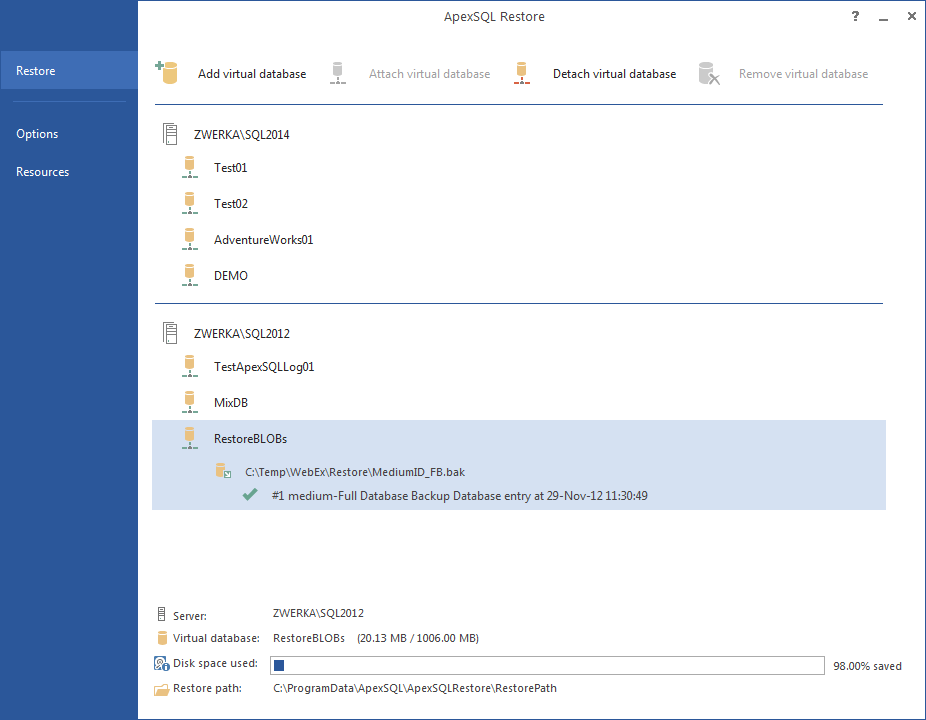

ApexSQL Restore attaches both native and natively compressed SQL database and transaction log backups as live databases, accessible via SQL Server Management Studio, Visual Studio or any third party tool. It allows attaching single or multiple backup files including full, differential and transaction log backups, making it ideal for continuous integration environments, quickly reverting inadvertent or malicious changes and balancing heavy report loads. For more information, visit the ApexSQL Restore product page

To use ApexSQL Restore in order to alleviate the performance impact made by reporting:

- Take a full database backup of the production database

- Start ApexSQL Restore

- Click the Add a virtual database button

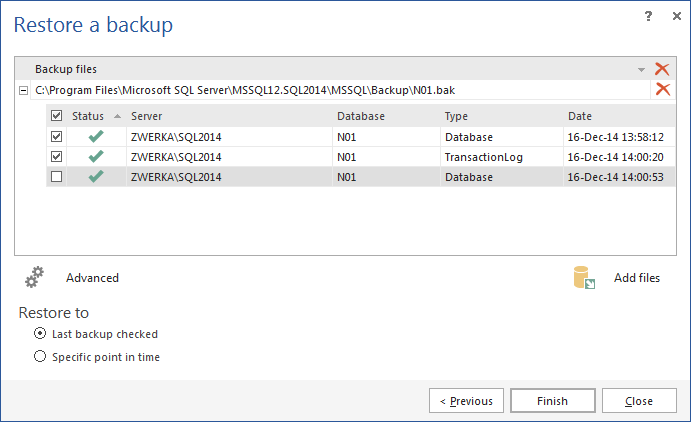

- In the Restore a backup dialog that will appear provide:

- The name of the SQL Server instance the backup will be attached to

- The preferred authentication method; to use SQL Server authentication a set of valid SQL Server credentials needs to be provided

- The name of the database the backup will be virtually restored to

- Click Next

- To specify the backup to be attached click Add file(s)…, navigate to the backup file and click Open

- Review the backup properties and select the appropriate checkboxes next to each backup you want to attach

- To attach a different file click Remove file and repeat the process described in step 5.

- To specify a non-default restore path click Advanced >> and specify the path

- Click Finish to attach the backup

- Run the report against the mounted virtual database

In summary, there are several different solutions to the problems caused by the performance impact made by heavy reports – but if you’re not willing to invest additional time, personnel, training, time or disk space to solve them ApexSQL Restore might be the tool for you

April 4, 2013