ApexSQL Log is a SQL log analyzer tool which can be used to read different transaction log files in order to perform SQL Server database auditing, transactional replication and data/schema recovery tasks.

- Auditing – with ApexSQL Log, we can look into not only the online transaction log file, but also fully investigate transaction log backup files (.trn, .bak) as well as detached transaction log files (LDF). Our SQL log analyzer allows us to not only store and export audited results in various formats, but also provides investigation mechanisms which allow users to directly view transaction log files contents and analyze it within the ApexSQL Log grid itself maximizing potential output and results

- Recovery – for ApexSQL Log may seem effortless, since our SQL Log analyzer can quickly and with minimal effort roll back all inadvertent changes or lost data/structure which cannot be recovered using conventional mechanisms or SQL Servers own tools. ApexSQL Log creats “Undo” or rollback scripts which include roll-back SQL operations and will return the data or structure in the previous state

- Replication – is another feature of ApexSQL Log which proved invaluable to SQL users faced with replication tasks. Our SQL log analyzer uses data audited from the transaction log files in order to generate “Redo” or roll-forward scripts which will completely mimic DML and DDL operations executed on the audited database. These scripts can then be executed over one or multiple databases in different SQL requirements in order to replay exact data and schema changes

In this article, we are going to showcase how to configure and achieve database auditing, DDL/DML recovery and transactional replication using our SQL log analyzer – ApexSQL Log.

Auditing

Any SQL Log analyzer must have powerful, top-notch auditing features which allow auditing, export and in-depth investigation transaction log files. ApexSQL Log is a SQL Server transaction log reader which audits DDL and DML operation/changes with plethora of output choices and investigation features.

-

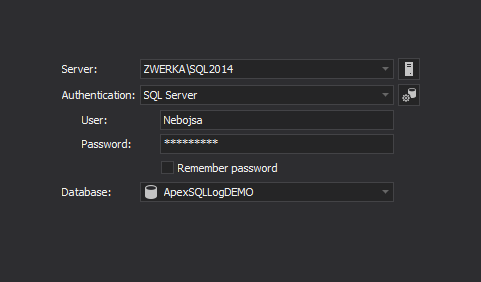

To start the auditing process, the first step in ApexSQL Log is to choose the SQL Server instance, select authentication method and provide valid credentials, and select a database to audit

-

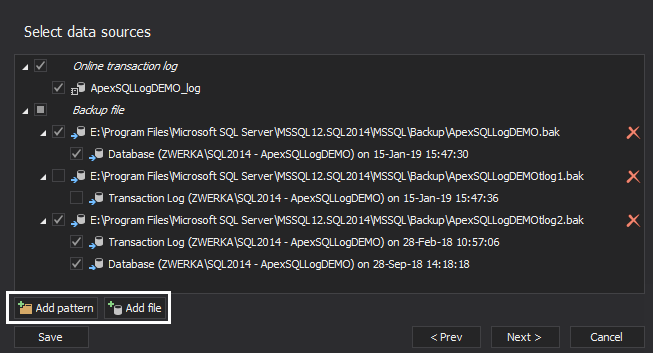

In the second step of the wizard, application will automatically locate and add online transaction log file, while additional transaction log files, including backups and detached LDF files can be added manually using “Add file” and “Add pattern” buttons

-

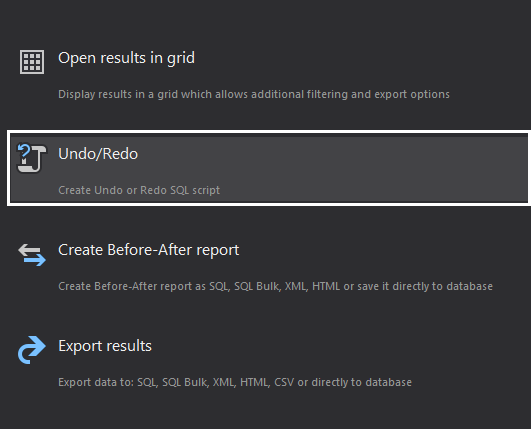

In the “Select output” step of the wizard, ApexSQL Log offers several auditing choices:

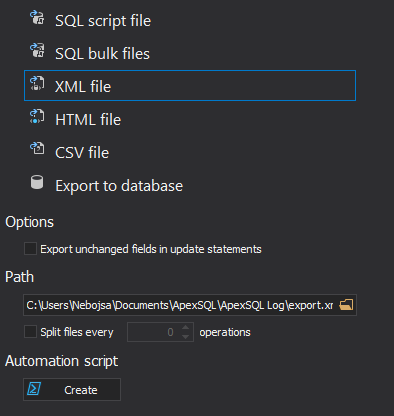

- Export results

- Create before-after report

- Open results in grid

Exporting auditing results option will result in audited report being generated directly inside specified repository tables, or an auditing report will be created in one of the supported file formats (SQL, SQL bulk, XML, HTRML, CSV), depending on the user’s preference.

The “Create before-after report” option functions in a similar manner as previously mentioned “Export results” option – a report is created in specified format, based on the user’s choice, but in this case audited information and details will be centered on the before and after values on DML changes only. The before-after reports use different repository tables than those generated via “Export results” option.

The most diverse auditing option of all in SQL Log Analyzer is “Open results in grid”. When this option is used, ApexSQL Log will populate a highly comprehensive grid with the audited information which allows users direct insight into the contents of their log files. Grid-sorted results can there be further filtered, investigated in great details, both for operation details and history of changes for specific table rows and more. Both previously mentioned options can also be used to export results from the grid once the forensics and investigation has been completed.

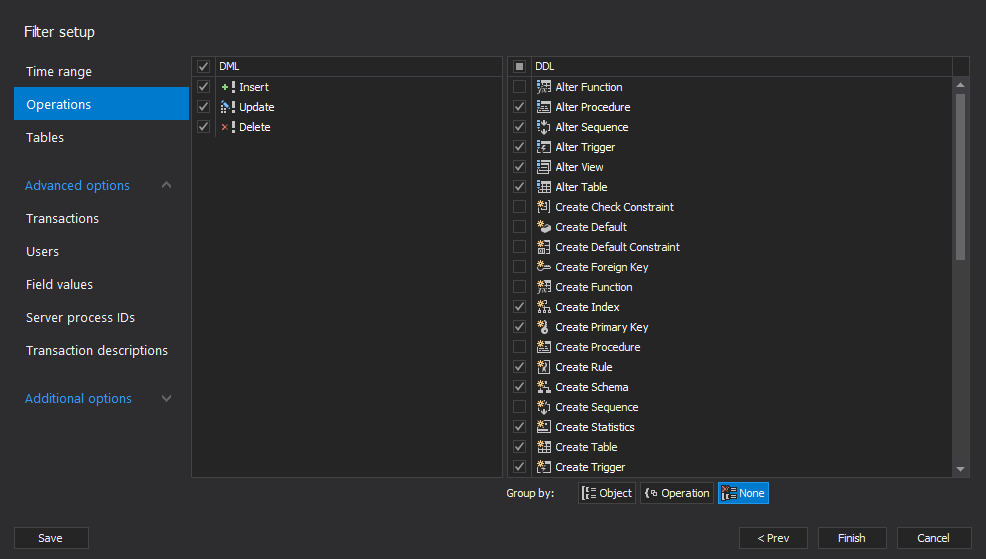

- Back to the auditing wizard, once the choice on auditing output has been made, ApexSQL Log will offer various filters in the “Filter setup” step. These filters can be used to fine-tune the auditing trail and ensure generated results hold high auditing value. Filters include but are not limited to are time-based, operations, tables, users, values, server process IDs and more

-

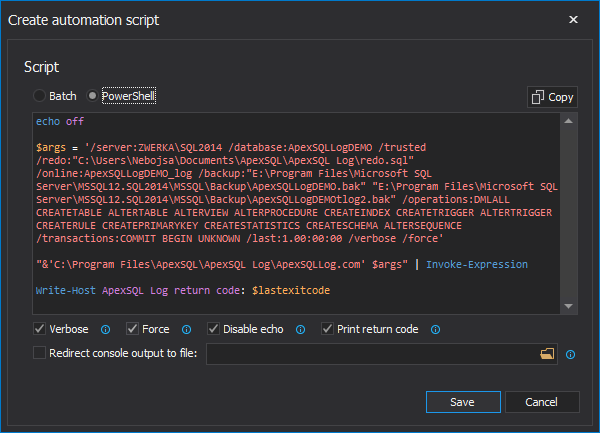

In the event of immediately generating reports with Export reports and Create before-after reports, in the final step of the wizard, users will get to choose export output and details. Additionally, ApexSQL Log can be used to create an automation script based on the wizard configuration which can be used to automate future auditing jobs using batch or PowerShell. Completing this step will finalize auditing job and auditing report will be generated in the specified format.

-

In cases when Open results in grid option is selected, ApexSQL Log will populate above mentioned grid with the auditing results, and users can work with the audited data directly, add additional filtering, browse/search shown results and details on each operation, forensically examine row history and more

Detailed information on exporting options and related features of ApexSQL Log, SQL log analyzer can be found in Exporting options in ApexSQL Log article.

And here are most commonly used auditing solutions

- How to continuously audit transaction log file data directly into a SQL Server database

- Read a SQL Server transaction log

- Open LDF file and view LDF file content

- Audit SQL Server database schema changes

- Audit SQL Server database security changes

Recovery

Our SQL Log Analyzer comes with powerful recovery mechanisms which allow operations rollback and recovery from all inadvertent or malicious data and structure changes. Leveraging information included in the transaction log files, ApexSQL Log creates historic overview of changes and creates recovery scripts which can be used to return table values or structure to the original state.

- Recovery process starts in the same way as the previously demonstrated auditing process. As before, we first connect to the SQL Server database and provide transaction logs to audit

-

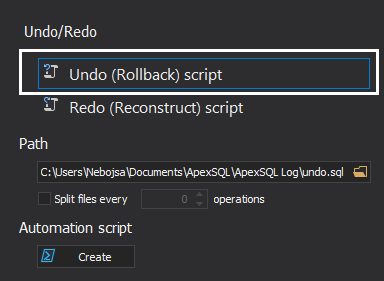

In the output selection, in case of the recovery process, users should opt for Undo/Redo option

- As before, all filters available in the auditing process can also be used during the recovery wizard

-

In the last step, we need to opt for the “Undo (Rollback) script” option

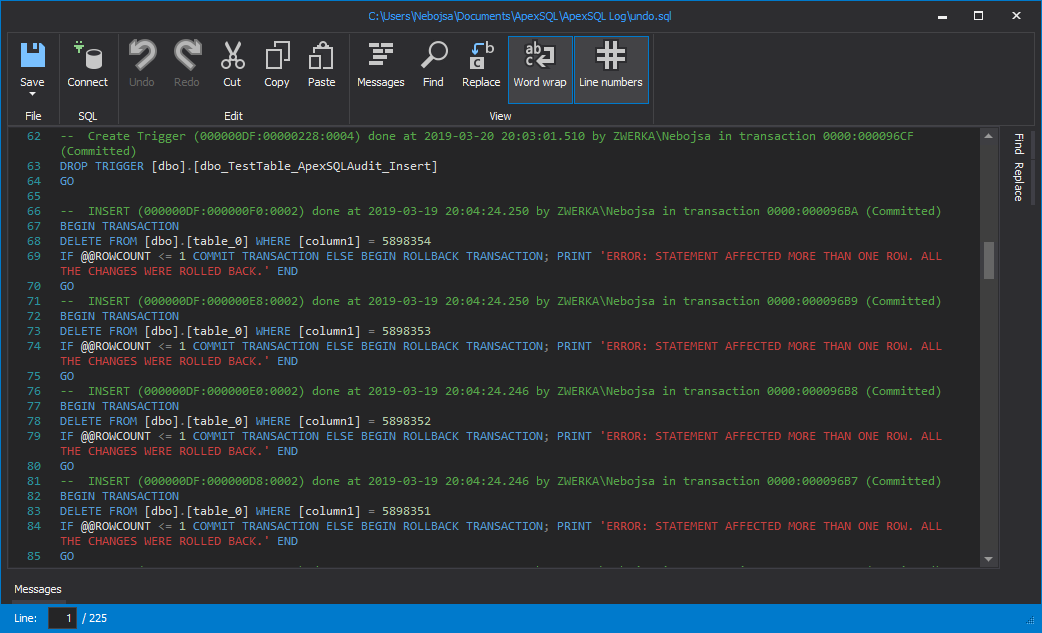

- Once the processing is completed, ApexSQL Log will generate a recovery script for the recovery purpose

- Generated recovery script can be opened in any SQL script editor, including but not limited to SQL Server Management Studio, or ApexSQL Log internal editor. Here, the script can be examined, edited and immediately executed to complete the recovery process and have damaged/lost data and structure in their original state

Here are some related articles on using ApexSQL Log recovery feature:

- How to recover SQL Server data from accidental UPDATE and DELETE operations

- Recover deleted SQL data from transaction logs

- Recover a SQL Server database using an old backup and the current transaction log file

- Recover a SQL Server database using only a transaction log file (.ldf) and old backup files

- How to recover views, stored procedures, functions, and triggers

Replication

Transactional replication is another prized feature of ApexSQL Log. ApexSQL Log can be used to read the information on all DML and DDL changes from the transaction log files, and to generate a “replay” script which can be applied to a subscriber database to mimic the changes and ensure that it is in sync with the original database. Furthermore, this process can be automated using batch or PowerShell.

- As was the case with two previously described use cases, replication process follows the same wizard, all the way until the output choice, where we again opt for the Undo/Redo option

- After that, the next step in line is to choose the “Redo” option and set the appropriate filters. When performing a transactional replication, it is important to ensure that this job is performed continuously and without duplicating operations/entries, so in any ongoing replication jobs, the best choice would be to use “Continuous auditing” option which will always start and pick up right where the previous replication job ended and ensure uninterrupted replication

-

To complete the replication job, configured project can be saved or batch/PowerShell script can be automatically generated and later automated to ensure replication is performed on regular pre-determined intervals

Several approaches and solutions for transactional replications can be found in the following articles:

- Hands free, no-coding SQL Server database replication of a reporting database

- How to setup SQL Server database replication for a reporting server

- How to set up a DDL and DML SQL Server database transactional replication solution

- How to setup custom SQL Server transactional replication with a central publisher and multiple subscriber databases

May 7, 2019